The Infinite

Working with the infinite is tricky business. Zeno first alerted Western philosophers to this in 450 B.C.E. when he argued in his paradoxes that a fast runner such as Achilles has an infinite number of places to reach during the pursuit of a slower runner. Since then, there has been a struggle to understand how to use the notion of infinity in a coherent manner. This article concerns the significant and controversial role that the concepts of infinity and the infinite play in the disciplines of philosophy, physical science, and mathematics.

Philosophers want to know whether there is more than one coherent concept of infinity; which entities and properties are infinitely large, infinitely small, infinitely divisible, and infinitely numerous; and what arguments can justify answers one way or the other.

Here are some examples of these four different ways to be infinite. The density of matter at the center of a black hole is infinitely large. An electron is infinitely small. An hour is infinitely divisible. The integers are infinitely numerous. These four claims are ordered from most to least controversial, although all four have been challenged in the philosophical literature.

This article also explores a variety of other questions about the infinite. Is the infinite something indefinite and incomplete, or is it complete and definite? What did Thomas Aquinas mean when he said God is infinitely powerful? Was Gauss, who was one of the greatest mathematicians of all time, correct when he made the controversial remark that scientific theories involve infinities merely as idealizations and merely in order to make for easy applications of those theories, when in fact all physically real entities are finite? How did the invention of set theory change the meaning of the term “infinite”? What did Cantor mean when he said some infinities are smaller than others? Quine said the first three sizes of Cantor’s infinities are the only ones we have reason to believe in. Mathematical Platonists disagree with Quine. Who is correct? We shall see that there are deep connections among all these questions.

Table of Contents

- What “Infinity” Means
  - Actual, Potential, and Transcendental Infinity
  - The Rise of the Technical Terms
- Infinity and the Mind
- Infinity in Metaphysics
- Infinity in Physical Science
  - Infinitely Small and Infinitely Divisible
  - Singularities
  - Idealization and Approximation
  - Infinity in Cosmology
- Infinity in Mathematics
  - Infinite Sums
  - Infinitesimals and Hyperreals
  - Mathematical Existence
  - Zermelo-Fraenkel Set Theory
  - The Axiom of Choice and the Continuum Hypothesis
- Infinity in Deductive Logic
  - Finite and Infinite Axiomatizability
  - Infinitely Long Formulas
  - Infinitely Long Proofs
  - Infinitely Many Truth Values
  - Infinite Models
- Infinity and Truth
- Conclusion
- References and Further Reading

1. What “Infinity” Means

The term “the infinite” refers to whatever it is that the word “infinity” correctly applies to. For example, the infinite integers exist just in case there is an infinity of integers. We also speak of infinite quantities, but what does it mean to say a quantity is infinite? In 1851, Bernard Bolzano argued in The Paradoxes of the Infinite that, if a quantity is to be infinite, then the measure of that quantity also must be infinite. Bolzano’s point is that we need a clear concept of infinite number in order to have a clear concept of infinite quantity. This idea of Bolzano’s has led to a new way of speaking about infinity, as we shall see.

The term “infinite” can be used for many purposes. The logician Alfred Tarski used it for dramatic purposes when he spoke about trying to contact his wife in Nazi-occupied Poland in the early 1940s. He complained, “We have been sending each other an infinite number of letters. They all disappear somewhere on the way. As far as I know, my wife has received only one letter” (Feferman 2004, p. 137). Although the meaning of a term is intimately tied to its use, we can tell only a very little about the meaning of the term from Tarski’s use of it to exaggerate for dramatic effect.

Looking back over the last 2,500 years of use of the term “infinite,” three distinct senses stand out: actually infinite, potentially infinite, and transcendentally infinite. These will be discussed in more detail below, but briefly, the concept of potential infinity treats infinity as an unbounded or non-terminating process developing over time. By contrast, the concept of actual infinity treats the infinite as timeless and complete. Transcendental infinity is the least precise of the three concepts and is more commonly used in discussions of metaphysics and theology to suggest transcendence of human understanding or human capability.

To give some examples, the set of integers is actually infinite, and so is the number of locations (points of space) between London and Moscow. The maximum length of grammatical sentences in English is potentially infinite, and so is the total amount of memory in a Turing machine, an ideal computer. An omnipotent being’s power is transcendentally infinite.

For purposes of doing mathematics and science, the actual infinite has turned out to be the most useful of the three concepts. Using the idea proposed by Bolzano that was mentioned above, the concept of the actual infinite was precisely defined in 1888 when Richard Dedekind redefined the term “infinity” for use in set theory and Georg Cantor made the infinite, in the sense of infinite set, an object of mathematical study. Before this turning point, the philosophical community generally believed Aristotle’s concept of potential infinity should be the concept used in mathematics and science.

a. Actual, Potential, and Transcendental Infinity

The Ancient Greeks generally conceived of the infinite as formless, characterless, indefinite, indeterminate, chaotic, and unintelligible. The term had negative connotations and was especially vague, having no clear criteria for distinguishing the finite from the infinite. In his treatment of Zeno’s paradoxes about infinite divisibility, Aristotle (384-322 B.C.E.) made a positive step toward clarification by distinguishing two different concepts of infinity: potential infinity and actual infinity. The latter is also called complete infinity and completed infinity. The actual infinite is not a process in time; it is an infinity that exists wholly at one time. By contrast, Aristotle spoke of the potentially infinite as a never-ending process over time that is nevertheless finite at any specific time.

The word “potential” is being used in a technical sense. A potential swimmer can learn to become an actual swimmer, but a potential infinity cannot become an actual infinity. Aristotle argued that all the problems involving reasoning with infinity are really problems of improperly applying the incoherent concept of actual infinity instead of the coherent concept of potential infinity. (See Aristotle’s Physics, Book III, for his account of infinity.)

For its day, this was a successful way of treating Zeno’s Achilles paradox since, if Zeno had confined himself to using only potential infinity, he would not have been able to develop his paradoxical argument. Here is why. Zeno said that to go from the start to the finish line, the runner must reach the place that is halfway-there, then after arriving at this place he still must reach the place that is half of that remaining distance, and after arriving there he again must reach the new place that is now halfway to the goal, and so on. These are too many places to reach because there is no end to these places since for any one there is another. Zeno made the mistake, according to Aristotle, of supposing that this infinite process needs completing when it really doesn’t; the finitely long path from start to finish exists undivided for the runner, and it is Zeno the mathematician who is demanding the completion of such a process. Without that concept of a completed infinite process there is no paradox.

Although today’s standard treatment of the Achilles paradox disagrees with Aristotle and says Zeno was correct to use the concept of a completed infinity and to imply the runner must go to an actual infinity of places in a finite time, Aristotle had so many other intellectual successes that his ideas about infinity dominated the Western world for the next two thousand years.

Even though Aristotle promoted the belief that “the idea of the actual infinite−of that whose infinitude presents itself all at once−was close to a contradiction in terms…” (Moore 2001, 40), others during those two thousand years did not treat it as a contradiction in terms. Archimedes, Duns Scotus, William of Ockham, Gregory of Rimini, and Leibniz made use of it. Archimedes used it, but had doubts about its legitimacy. Leibniz used it but had doubts about whether it was needed.

Here is an example of how Gregory of Rimini argued in the fourteenth century for the coherence of the concept of actual infinity:

If God can endlessly add a cubic foot to a stone—which He can—then He can create an infinitely big stone. For He need only add one cubic foot at some time, another [cubic foot] half an hour later, another a quarter of an hour later than that, and so on ad infinitum. He would then have before Him an infinite stone at the end of the hour. (Moore 2001, 53)
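The arithmetic behind Gregory's schedule can be checked directly. The following is only a minimal sketch and is not drawn from Gregory or from Moore; the function name `stone_after` is hypothetical. It assumes one reading of the passage: the first cubic foot is added at time zero, and the wait before each later addition is half the previous wait, starting with half an hour.

```python
from fractions import Fraction

def stone_after(n):
    """Return (elapsed_hours, added_cubic_feet) once n additions have been made.

    Assumed reading of the schedule: addition 1 happens at time 0, and the
    wait before addition k+1 is (1/2)**k hours.
    """
    elapsed = sum((Fraction(1, 2**k) for k in range(1, n)), Fraction(0))
    return elapsed, n

# Elapsed time creeps toward one hour; the added volume grows without bound.
for n in (1, 2, 3, 10, 50):
    elapsed, volume = stone_after(n)
    print(n, float(elapsed), volume)
```

For every finite n the elapsed time stays below one hour while the added volume exceeds any chosen bound. Whether there is a coherent state of the stone at the one-hour mark is the philosophical question, and the computation does not settle it.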

Leibniz envisioned the world as being an actual infinity of mind-like monads, and in (Leibniz 1702) he freely used the concept of being infinitesimally small in his development of the calculus in mathematics.

The term “infinity” that is used in contemporary mathematics and science is based on a technical development of this earlier, informal concept of actual infinity. This technical concept was not created until late in the 19th century.

b. The Rise of the Technical Terms

In the centuries after the decline of ancient Greece, the word “infinite” slowly changed its meaning in Medieval Europe. Theologians promoted the idea that God is infinite because He is limitless, and this at least caused the word “infinity” to lose its negative connotations. Eventually, during the Medieval Period, the word had come to mean endless, unlimited, and immeasurable–but not necessarily chaotic. The question of its intelligibility and conceivability by humans was disputed.

The meaning of the term “actual infinity” is now very different. There are actual infinities in the technical, post-1880s sense that are neither endless, unlimited, nor immeasurable. A line segment one meter long is a good example. It is not endless because it is finitely long, and it is not a process because it is timeless. It is not unlimited because it is bounded at zero and at one. It is not immeasurable because its length measure is one meter. Nevertheless, the one-meter line is infinite in the technical sense because it has an actual infinity of sub-segments, and it has an actual infinity of distinct points. So, there definitely has been a conceptual revolution.

This can be very shocking to those people who are first introduced to the technical term “actual infinity.” It seems not to be the kind of infinity they are thinking about. The crux of the problem is that these people really are using a different concept of infinity. The sense of infinity in ordinary discourse these days is either the Aristotelian one of potential infinity or the medieval one that requires infinity to be endless, immeasurable, and perhaps to have connotations of perfection or inconceivability. This article uses the name transcendental infinity for the medieval concept although there is no generally accepted name for the concept. A transcendental infinity transcends human limits and detailed knowledge; it might be incapable of being described by a precise theory. It might also be a cluster of concepts rather than a single one.

Those people who are surprised when first introduced to the technical term “actual infinity” are probably thinking of either potential infinity or transcendental infinity, and that is why, in any discussion of infinity, some philosophers will say that an appeal to the technical term “actual infinity” is changing the subject. Another reason why there is opposition to actual infinities is that they have so many counter-intuitive properties. For example, consider a continuous line that has an actual infinity of points. A single point on this line has no next point! Also, a one-dimensional continuous curve can fill a two-dimensional area. Equally counterintuitive is the fact that some actually infinite numbers are smaller than other actually infinite numbers. Looked at more optimistically, though, most other philosophers will say the rise of this technical term is yet another example of how the discovery of a new concept has propelled civilization forward.

Resistance to the claim that there are actual infinities has had two other sources. One is the belief that actual infinities cannot be experienced. The other is the belief that use of the concept of actual infinity leads to paradoxes, such as Zeno’s. In order to solve Zeno’s Paradoxes, the standard solution makes use of calculus. The birth of the new technical definition of actual infinity is intimately tied to the development of calculus and thus to properly defining the mathematician’s real line, the linear continuum. The set of real numbers in their standard order was given the name “the continuum” or “the linear continuum” because it was believed that the real numbers fill up the entire number line continuously without leaving gaps. The integers have gaps, and so do the fractions.

Briefly, the argument for actual infinities is that science needs calculus; calculus needs the continuum; the continuum needs a very careful definition; and the best definition requires there to be actual infinities (not merely potential infinities) in the continuum.

Defining the continuum involves defining real numbers because the linear continuum is the intended model of the theory of real numbers just as the plane is the intended model of the theory of ordinary two-dimensional geometry. It was eventually realized by mathematicians that giving a careful definition to the continuum and to real numbers requires formulating their definitions within set theory. As part of that formulation, mathematicians found a good way to define a rational number in the language of set theory; then they defined a real number to be a certain pair of actually infinite sets of rational numbers. The continuum’s eventual definition required it to be an actually infinite collection whose elements are themselves infinite sets. The details are too complex to be presented here, but the curious reader can check any textbook in classical real analysis. The intuitive picture is that any interval or segment of the continuum is a continuum, and any continuum is a very special infinite set of points that are packed so closely together that there are no gaps. A continuum is perfectly smooth. This smoothness is reflected in there being a very great many real numbers between any two real numbers (technically a nondenumerable infinity between them).
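The idea of defining a real number by sets of rationals can be sketched in a few lines of code. The example below is an illustration under stated assumptions, not the textbook construction in full: it represents the square root of 2 only by the membership test for the lower set of its cut (the upper set is then the rest of the rationals), and the names `in_lower_sqrt2` and `bigger_member` are hypothetical.

```python
from fractions import Fraction

def in_lower_sqrt2(q):
    """Membership test for the lower set of the cut that defines sqrt(2)."""
    return q < 0 or q * q < 2

def bigger_member(q):
    """Given a non-negative rational q in the lower set, return a larger one.

    The formula (2q + 2) / (q + 2) is a standard choice: it exceeds q by
    (2 - q*q) / (q + 2), and its square is still below 2.
    """
    return (2 * q + 2) / (q + 2)

print(in_lower_sqrt2(Fraction(7, 5)))   # 49/25 is below 2
print(in_lower_sqrt2(Fraction(3, 2)))   # 9/4 is above 2

q = Fraction(1)
for _ in range(5):
    q = bigger_member(q)
    print(q, in_lower_sqrt2(q))
```

The loop shows that the lower set has no largest member: every rational in it can be improved upon. No rational number marks the boundary, and the cut itself is taken to be the real number that does.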

Calculus is the area of mathematics that is more applicable to science than any other area. It can be thought of as a technique for treating a continuous change as being composed of an infinite number of infinitesimal changes. When calculus is applied to physical properties capable of change such as spatial location, ocean salinity, or an electrical circuit’s voltage, these properties are represented with continuous variables that have real numbers for their values. These values are specific real numbers, not ranges of real numbers and not just rational numbers. Achilles’ location along the path to his goal is such a property.

It took many centuries to rigorously develop the calculus. A very significant step in this direction occurred in 1888 when Richard Dedekind re-defined the term “infinity” and when Georg Cantor used that definition to create the first set theory, a theory that eventually was developed to the point where it could be used for embedding all classical mathematical theories. See the example in the Zeno’s Paradoxes article of how Dedekind used set theory and his new idea of “cuts” to define the real numbers in terms of infinite sets of rational numbers. In this way, additional rigor was given to the concepts of mathematics, and it encouraged more mathematicians to accept the notion of actually infinite sets. What this embedding requires is first defining the terms of any mathematical theory in the language of set theory, then translating the axioms and theorems of the mathematical theory into sentences of set theory, and then showing that these translated theorems follow logically from the axioms of set theory. (The axioms of any theory, such as set theory, are the special sentences of the theory that can always be assumed during the process of deducing the other theorems of the theory.)

The new technical treatment of infinity that originated with Dedekind in 1888 and was adopted by Cantor in his new set theory provided a definition of “infinite set” rather than simply “infinite.” Dedekind says an infinite set is a set that is not finite. The notion of a finite set can be defined in various ways. We might define it numerically as a set having n members, where n is some non-negative integer. Dedekind found an essentially equivalent definition of finite set (assuming the axiom of choice, which will be discussed later), but Dedekind’s definition does not require mentioning numbers:

A (Dedekind) finite set is a set for which there exists no one-to-one correspondence between it and one of its proper subsets.

By placing the finger-tips of your left hand on the corresponding fingertips of your right hand, you establish a one-to-one correspondence between the set of fingers of each hand; in that way, you establish that there is the same number of fingers on each of your hands, without your needing to count the fingers. More generally, there is a one-to-one correspondence between two sets when each member of one set can be paired off with a unique member of the other set, so that neither set has an unpaired member.

Here is a one-to-one correspondence between the set of natural numbers and its proper subset of even numbers, demonstrating that the set of natural numbers is infinite:

| 1 | 2 | 3 | 4 | … |
| ↕ | ↕ | ↕ | ↕ |   |
| 2 | 4 | 6 | 8 | … |

Informally expressed, any infinite set can be matched up to a part of itself; so the whole is equivalent to a part. This is a surprising definition because, before this definition was adopted, the idea that actually infinite wholes are equinumerous with some of their parts was taken as clear evidence that the concept of actual infinity is inherently paradoxical. For a systematic presentation of the many alternative ways to successfully define “infinite set” non-numerically, see (Tarski 1924).
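The contrast between the two cases can be made concrete with a short program. This is a hedged sketch with hypothetical function names: a computer can only inspect a finite initial stretch of the natural numbers, so the first function illustrates the pairing rule rather than proving anything about the whole infinite set, while the second checks by brute force that a small finite set has no one-to-one correspondence with any of its proper subsets.

```python
from itertools import product

def pairs_with_evens(limit):
    """Pair each natural number 1..limit with the even number 2n."""
    return [(n, 2 * n) for n in range(1, limit + 1)]

def injects_into_proper_subset(s):
    """Brute-force search over every function from s to s.

    Returns True if some one-to-one function has an image that is a proper
    subset of s, which is what Dedekind's definition rules out for finite sets.
    """
    elems = sorted(s)
    for images in product(elems, repeat=len(elems)):
        one_to_one = len(set(images)) == len(elems)
        if one_to_one and set(images) != set(elems):
            return True
    return False

print(pairs_with_evens(4))
print(injects_into_proper_subset({1, 2, 3, 4}))
```

The rule n to 2n never sends two numbers to the same even number and never runs out of even numbers, which is why it pairs the whole set with a part of itself. For the four-element set, the search over all 256 functions finds no such pairing.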

Dedekind’s new definition of “infinite” is defining an actually infinite set, not a potentially infinite set because Dedekind appealed to no continuing operation over time. The concept of a potentially infinite set is then given a new technical definition by saying a potentially infinite set is a growing, finite subset of an actually infinite set. Cantor expressed the point this way:

In order for there to be a variable quantity in some mathematical study, the “domain” of its variability must strictly speaking be known beforehand through a definition. However, this domain cannot itself be something variable…. Thus this “domain” is a definite, actually infinite set of values. Thus each potential infinite…presupposes an actual infinite. (Cantor 1887)

The new idea is that the potentially infinite set presupposes an actually infinite one. If this is correct, then Aristotle’s two notions of the potential infinite and actual infinite have been redefined and clarified.

Two sets are the same if any member of one is a member of the other, and vice versa. Order of the members is irrelevant to the identity of the set, and to the size of the set. Two sets are the same size if there exists a one-to-one correspondence between them. This definition of same size was recommended by both Cantor and Frege. Cantor defined “finite” by saying a set is finite if there is a one-to-one correspondence with the set {1, 2, 3, …, n} for some positive integer n; and he said a set is infinite if it is not finite.

Cardinal numbers are measures of the sizes of sets. There are many definitions of what a cardinal number is, but what is essential for cardinal numbers is that two sets have the same cardinal just in case there is a one-to-one correspondence between them; and set A has a smaller cardinal number than a set B (and so set A has fewer members than B) provided there is a one-to-one correspondence between A and a subset of B, but B is not the same size as A. In this sense, the set of even integers does not have fewer members than the set of all integers, although intuitively you might think it does.

How big is infinity? This question does not make sense for either potential infinity or transcendental infinity, but it does for actual infinity. Finite cardinal numbers such as 0, 1, 2, and 3 are measures of the sizes of finite sets, and transfinite cardinal numbers are measures of the sizes of actually infinite sets. The transfinite cardinals are aleph-null, aleph-one, aleph-two, and so on; we represent them with the numerals ℵ₀, ℵ₁, ℵ₂, …. The smallest infinite size is ℵ₀ which is the size of the set of natural numbers, and it is said to be countably infinite (or denumerably infinite or enumerably infinite). The other alephs are measures of the uncountable infinities. However, calling a set of size ℵ₀ countably infinite is somewhat misleading since no process of counting is involved. Nobody would have the time to count from 0 to ℵ₀.

The set of even integers, the set of natural numbers and the set of rational numbers all can be shown to have the same size, but surprisingly they all are smaller than the set of real numbers. The set of points in the continuum and in any interval of the continuum turns out to be larger than ℵ₀, although how much larger is still an open problem, called the continuum problem. A popular but controversial suggestion is that a continuum is of size ℵ₁, the next larger size.
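One way to see that the rationals are no more numerous than the natural numbers is to list them one after another. The sketch below uses the Calkin-Wilf sequence with Newman's successor formula, a technique chosen here for brevity and not one the article itself discusses; the generator name `positive_rationals` is hypothetical, and only the positive rationals are listed.

```python
from fractions import Fraction
from itertools import islice
from math import floor

def positive_rationals():
    """Yield the positive rationals in Calkin-Wilf order, each in lowest terms."""
    q = Fraction(1)
    while True:
        yield q
        # Newman's formula for the next term of the Calkin-Wilf sequence.
        q = 1 / (2 * floor(q) - q + 1)

first = list(islice(positive_rationals(), 15))
print([str(q) for q in first])
```

Assigning to each rational its position in this list gives the one-to-one correspondence with the natural numbers. No comparable list can be given for the real numbers, which is the content of the claim that the continuum is larger than ℵ₀.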

When creating set theory, mathematicians did not begin with the belief that there would be so many points between any two points in the continuum nor with the belief that for any infinite cardinal there is a larger cardinal. These were surprising consequences discovered by Cantor. To many philosophers, this surprise is evidence that what is going on is not invention but rather is discovery about mind-independent reality.
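The result that every set is smaller than the set of its own subsets rests on a diagonal construction that can be tried out on a small finite set. The following sketch is an illustration only, with hypothetical function names; it checks every function from a three-element set to its set of subsets and confirms that the "diagonal" subset is never among the values of the function. Cantor's argument shows the same thing for infinite sets, where no exhaustive check is possible.

```python
from itertools import combinations, product

def power_set(elems):
    """All subsets of elems, as frozensets."""
    return [frozenset(c)
            for r in range(len(elems) + 1)
            for c in combinations(elems, r)]

def diagonal_set(elems, f):
    """The elements x that do not belong to the subset f[x] assigned to them."""
    return frozenset(x for x in elems if x not in f[x])

def diagonal_always_missed(elems):
    """True if, for every function f from elems to its subsets,
    the diagonal set is absent from the values of f."""
    subsets = power_set(elems)
    for images in product(subsets, repeat=len(elems)):
        f = dict(zip(elems, images))
        if diagonal_set(elems, f) in images:
            return False
    return True

print(len(power_set((0, 1, 2))))
print(diagonal_always_missed((0, 1, 2)))
```

Because the diagonal subset always escapes, no function from a set onto all of its subsets exists, so the set of subsets has a strictly larger cardinal number.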

The intellectual community has always been wary of actually infinite sets. Before the discovery of how to embed calculus within set theory (a process that is also called giving calculus a basis in set theory), it could have been more easily argued that science does not need actual infinities. The burden of proof has now shifted, and the default position is that actual infinities are indispensable in mathematics and science, and anyone who wants to do without them must show that removing them does not do too much damage and has additional benefits. There are no known successful attempts to reconstruct the theories of mathematical physics without basing them on mathematical objects such as numbers and sets, but for one attempt to do so using second-order logic, see (Field 1980).

Here is why some mathematicians believe the set-theoretic basis is so important:

Just as chemistry was unified and simplified when it was realized that every chemical compound is made of atoms, mathematics was dramatically unified when it was realized that every object of mathematics can be taken to be the same kind of thing [namely, a set]. There are now other ways than set theory to unify mathematics, but before set theory there was no such unifying concept. Indeed, in the Renaissance, mathematicians hesitated to add x² to x³, since the one was an area and the other a volume. Since the advent of set theory, one can correctly say that all mathematicians are exploring the same mental universe. (Rucker 1982, p. 64)

But the significance of this basis can be exaggerated. The existence of the basis does not imply that mathematics is set theory.

Paradoxes soon were revealed within set theory—by Cantor himself and then others—so the quest for a more rigorous definition of the mathematical continuum continued. Cantor’s own paradox surfaced in 1895 when he asked whether the set of all cardinal numbers has a cardinal number. Cantor showed that, if it does, then it doesn’t. Surely the set of all sets would have the greatest cardinal number, but Cantor showed that for any cardinal number there is a greater cardinal number. [For more details about this and the other paradoxes, see (Suppes 1960).] The most famous paradox of set theory is Russell’s Paradox of 1901. He showed that the set of all sets that are not members of themselves is both a member of itself and not a member of itself. Russell wrote that the paradox “put an end to the logical honeymoon that I had been enjoying.”
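Russell's reasoning can be written out in three short steps. The notation below is an assumption made for illustration: R names the set of all sets that are not members of themselves, and the first line presupposes the naive principle that every condition determines a set.

```latex
\begin{gather*}
R = \{\, x : x \notin x \,\} \\
\forall x \,\bigl( x \in R \iff x \notin x \bigr) \\
R \in R \iff R \notin R
\end{gather*}
```

The second line restates what membership in R means, and the third applies it to R itself. Since the last line is a contradiction, the naive principle used in the first line has to be given up or restricted.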

These and other paradoxes were eventually resolved satisfactorily by finding revised axioms of set theory. The revised axioms permit the existence of enough well-behaved sets that set theory is not crippled [that is, made incapable of providing a basis for mathematical theories], yet they do not permit the existence of too many sets, the ill-behaved sets such as Cantor’s set of all cardinals and Russell’s set of all sets that are not members of themselves. By the mid-20th century, it had become clear that, despite the existence of competing set theories, Zermelo-Fraenkel set theory (ZF) was the best way or the least radical way to revise set theory in order to avoid all the known paradoxes and problems while preserving enough of our intuitive ideas about sets that it deserved to be called a set theory. By that time most mathematicians would have agreed that the continuum had been given a proper basis in ZF. See (Kleene 1967, pp. 189-191) for comments on this agreement about ZF’s success, for a list of the ZF axioms, and for a detailed explanation of why each axiom deserves to be an axiom.

Because of this success, and because it was clear enough that the concept of infinity used in ZF does not lead to contradictions, and because it seemed so evident how to use the concept in other areas of mathematics and science where the term “infinity” was being used, the definition of the concept of “infinite set” within ZF was claimed by many philosophers to be the paradigm example of how to provide a precise and fruitful definition of a philosophically significant concept. Much less attention was then paid to critics who had complained that we can never use the word “infinity” coherently because infinity is ineffable or inherently paradoxical.

Nevertheless, there was, and still is, serious philosophical opposition to actually infinite sets and to ZF’s treatment of the continuum, and this has spawned the programs of constructivism, intuitionism, finitism, and ultrafinitism, all of whose advocates have philosophical objections to actual infinities. Even though there is much to be said in favor of replacing a murky concept with a clearer, technical concept, there is always the worry that the replacement is a change of subject that has not really solved the problems it was designed for. More discussion of the role of infinity in mathematics and science continues in later sections of this article.

2. Infinity and the Mind

Can humans grasp the concept of the infinite? This seems to be a profound question. Ever since Zeno, intellectuals have realized that careless reasoning about infinity can lead to paradox and perhaps “defeat” the human mind.

Some critics of infinity argue not just that paradox can occur but that paradox is essential to, or inherent in, the use of the concept of infinity, so the infinite is beyond the grasp of the human mind. However, this criticism applies more properly to some forms of transcendental infinity rather than to either actual infinity or potential infinity. This is a consequence of the development of set theory as we shall see in a later section.

A second reason to believe humans cannot grasp infinity is that the concept must contain an infinite number of sub-concepts, which is too many for our finite minds. A counter to this reason is to defend the psychological claim that if a person succeeds in thinking about infinity, it does not follow that the person needs to have an actually infinite number of ideas in mind at one time.

A third reason to believe the concept of infinity is beyond human understanding is that to have the concept one must have some accurate mental picture of infinity. Thomas Hobbes, who believed that all thinking is based on imagination, might remark that nobody could picture an infinite number of grains of sand at once. However, most contemporary philosophers of psychology believe mental pictures are not essential to have a concept. Regarding the concept of dog, you might have a picture of a brown dog in your mind, and I might have a picture of a black dog in mine, but I can still understand you perfectly well when you say dogs frequently chase cats.

The main issue here is whether we can coherently think about infinity to the extent of being said to have the concept. Here is a simple argument that we can: If we understand negation and have the concept of finite, then the concept of infinite is merely the concept of not-finite. A second argument says the apparent consistency of set theory indicates that infinity in the technical sense of actual infinity is well within our grasp. And since potential infinity is definable in terms of actual infinity, it, too, is within our grasp.

Assuming that infinity is within our grasp, what is it that we are grasping? Philosophers disagree on the answer. In 1883, the father of set theory, Georg Cantor, created a formal theory of infinite sets as a way of clarifying the infinite. This was a significant advance, but the notion of set can be puzzling. If you understand that a pencil is on my desk, must you implicitly understand that a set containing a pencil is on my desk? Plus a set containing that set? And another set containing the set containing the set with the pencil, and so forth to infinity?

In regard to mentally grasping an infinite set or any other set, Cantor said:

A set is a Many which allows itself to be thought of as a One.

Notice the dependence of a set upon thought. Cantor eventually clarified what he meant and was clear that he did not want set existence to depend on mental capability. What he really believed is that a set is a collection of well-defined and distinct objects that exist independently of being thought of, but that might be thought of by a powerful enough mind.

3. Infinity in Metaphysics

There is a concept which corrupts and upsets all others. I refer not to Evil, whose limited realm is that of ethics; I refer to the infinite. —Jorge Luis Borges.

Shakespeare declared, “The will is infinite.” Is he correct or just exaggerating? Critics of Shakespeare, interpreted literally, might argue that the will is basically a product of different brain states. Because a person’s brain contains approximately 10²⁷ atoms, these have only a finite number of configurations or states, and so, regardless of whether we interpret Shakespeare’s remark as implying that the will is unbounded (is potentially infinite) or the will produces an infinite number of brain states (is actually infinite), the will is not infinite. But perhaps Shakespeare was speaking metaphorically and did not intend to be taken literally, or perhaps he meant to use some version of transcendental infinity that makes infinity be somehow beyond human comprehension.

Contemporary Continental philosophers often speak that way. Emmanuel Levinas says the infinite is another name for the Other, for the existence of other conscious beings besides ourselves whom we are ethically responsible for. We “face the infinite” in the sense of facing a practically incomprehensible and unlimited number of possibilities upon encountering another conscious being. (See Levinas 1961.) If we ask what sense of “infinite” is being used by Levinas, it may be yet another concept of infinity, or it may be some kind of transcendental infinity. Another interpretation is that he is exaggerating about the number of possibilities and should say instead that there are too many possibilities to be faced when we encounter another conscious being and that the possibilities are not readily predictable because other conscious beings make free choices, the causes of which often are not known even to the person making the choice.

Leibniz was one of the few persons in earlier centuries who believed in actually infinite sets, but he did not believe in infinite numbers. Cantor did. Referring to his own discovery of the transfinite cardinals ℵ₀, ℵ₁, ℵ₂, … and their properties, Cantor claimed his work was revealing God’s existence and that these mathematical objects were in the mind of God. He claimed God gave humans the concept of the infinite so that they could reflect on His perfection. Influential German neo-Thomists such as Constantin Gutberlet agreed with Cantor. Some Jesuit math instructors claim that by taking a calculus course and set theory course and understanding infinity, students are getting closer to God. Their critics complain that these mystical ideas about infinity and God are too speculative.

When metaphysicians speak of infinity they use all three concepts: potential infinity, actual infinity, and transcendental infinity. But when they speak about God being infinite, they are usually interested in implying that God is beyond human understanding or that there is a lack of a limit on particular properties of God, such as God’s goodness and knowledge and power.

The connection between infinity and God exists in nearly all of the world’s religions. It is prominent in Hindu, Muslim, Jewish, and Christian literature. For example, in chapter 11 of the Bhagavad Gita of Hindu scripture, Krishna says, “O Lord of the universe, I see You everywhere with infinite form….”

Plato did not envision God (the Demiurge) as infinite because he viewed God as perfect, and he believed anything perfect must be limited and thus not infinite because the infinite was defined as an unlimited, unbounded, indefinite, unintelligible chaos.

But the meaning of the term “infinite” slowly began to change. Over six hundred years later, the Neo-Platonist philosopher Plotinus was one of the first important Greek philosophers to equate God with the infinite, although he did not do so explicitly. He said instead that any idea abstracted from our finite experience is not applicable to God. He probably believed that if God were finite in some aspect, then there could be something beyond God and therefore God wouldn’t be “the One.” Plotinus was influential in helping remove the negative connotations that had accompanied the concept of the infinite. One difficulty here, though, is that it is unclear whether metaphysicians have discovered that God is identical with the transcendentally infinite or whether they are simply defining “God” to be that way. A more severe criticism is that perhaps they are just defining “infinite” (in the transcendental sense) as whatever God is.

Augustine, who merged Platonic philosophy with the Christian religion, spoke of God “whose understanding is infinite” for “what are we mean wretches that dare presume to limit His knowledge?” Augustine wrote that the reason God can understand the infinite is that “…every infinity is, in a way we cannot express, made finite to God….” [City of God, Book XII, ch. 18] This is an interesting perspective. Medieval philosophers debated whether God could understand infinite concepts other than Himself, not because God had limited understanding, but because there was no such thing as infinity anywhere except in God.

The medieval philosopher Thomas Aquinas, too, said God has infinite knowledge. He definitely did not mean potentially infinite knowledge. The technical definition of actual infinity might be useful here. If God is infinitely knowledgeable, this can be understood perhaps as meaning that God knows the truth values of all declarative sentences and that the set of these sentences is actually infinite.

Aquinas argued in his Summa Theologiae that, although God created everything, nothing created by God can be actually infinite. His main reason was that anything created can be counted, yet if an infinity were created, then the count would be infinite, but no infinite numbers exist to do the counting (as Aristotle had also said). In his day this was a better argument than it is today because Cantor created (or discovered) infinite numbers in the late 19th century.

René Descartes believed God was actually infinite, and he remarked that the concept of actual infinity is so awesome that no human could have created it or deduced it from other concepts, so any idea of infinity that humans have must have come from God directly. Thus God exists. Descartes is using the concept of infinity to produce a new ontological argument for God’s existence.

David Hume, and many other philosophers, raised the problem that if God has infinite power then there need not be evil in the world, and if God has infinite goodness, then there should not be any evil in the world. This problem is often referred to as “The Problem of Evil” and has been a long-standing point of contention for theologians.

Spinoza and Hegel envisioned God, or the Absolute, pantheistically. If they are correct, then to call God infinite is to call the world itself infinite. Hegel denigrated Aristotle’s advocacy of potential infinity and claimed the world is actually infinite. Traditional Christian, Muslim, and Jewish metaphysicians do not accept the pantheistic notion that God is at one with the world. Instead, they say God transcends the world. Since God is outside space and time, the space and time that he created may or may not be infinite, depending on God’s choice, but surely everything else he created is finite, they say.

The multiverse theories of cosmology in the early 21st century allow there to be an uncountable infinity of universes within a background space whose volume is actually infinite. The universe created by our Big Bang is just one of these many universes. Christian theologians often balk at the notion of God choosing to create this multiverse because of the theory’s implication that, although there are so many universes radically different from ours, there also are an actually infinite number of ones just like ours. This implies there is an infinite number of indistinguishable copies of Jesus, each of whom has been crucified on the cross. This removal of the uniqueness of Jesus is apparently a removal of his dignity. Augustine had this worry about uniqueness when considering infinite universes, and he responded that “Christ died once for sinners….”

There are many other entities and properties that some metaphysician or other has claimed are infinite: places, possibilities, propositions, properties, particulars, partial orderings, pi’s decimal expansion, predicates, proofs, Plato’s forms, principles, power sets, probabilities, positions, and possible worlds. That is just for the letter p. Some of these are considered to be abstract objects, objects outside of space and time, and others are considered to be concrete objects, objects within, or part of, space and time.

For helpful surveys of the history of infinity in theology and metaphysics, see (Owen 1967) and (Moore 2001).

4. Infinity in Physical Science

From a metaphysical perspective, the theories of mathematical physics seem to be ontologically committed to objects and their properties. If any of those objects or properties are infinite, then physics is committed to there being infinity within the physical world.

Here are four suggested examples where infinity occurs within physical science. (1) Standard cosmology based on Einstein’s general theory of relativity implies the density of the mass at the center of a simple black hole is infinitely large (even though the black hole’s total mass is finite). (2) The Standard Model of particle physics implies the size of an electron is infinitely small. (3) General relativity implies that every path in space is infinitely divisible. (4) Classical quantum theory implies the values of the kinetic energy of an accelerating, free electron are infinitely numerous. These four kinds of infinities (infinitely large, infinitely small, infinitely divisible, and infinitely numerous) are implied by theory and argumentation, and are not something that could be measured directly.

Objecting to taking scientific theories at face value, the 18th-century British empiricists George Berkeley and David Hume denied the physical reality of even potential infinities on the empiricist grounds that such infinities are not detectable by our sense organs. Most philosophers of the 21st century would say that Berkeley’s and Hume’s empirical standards are too rigid because they are based on the mistaken assumption that our knowledge of reality must be a complex built up from simple impressions gained from our sense organs.

But in the spirit of Berkeley’s and Hume’s empiricism, instrumentalists also challenge any claim that science tells us the truth about physical infinities. The instrumentalists say that all theories of science are merely effective “instruments” designed for explanatory and predictive success. A scientific theory’s claims are neither true nor false. By analogy, a shovel is an effective instrument for digging, but a shovel is neither true nor false. The instrumentalist would say our theories of mathematical physics imply only that reality looks “as if” there are physical infinities. Some realists on this issue respond that to declare it to be merely a useful mathematical fiction that there are physical infinities is just as misleading as to say it is mere fiction that moving planets actually have inertia or petunias actually contain electrons. We have no other tool than theory-building for accessing the existing features of reality that are not directly perceptible. If our best theories—those that have been well tested and are empirically successful and make novel predictions—use theoretical terms that refer to infinities, then infinities must be accepted. See (Leplin 2000) for more details about anti-realist arguments, such as those of instrumentalism and constructive empiricism.

a. Infinitely Small and Infinitely Divisible

Consider the size of electrons and quarks, the two main components of atoms. All scientific experiments so far have been consistent with electrons and quarks having no internal structure (components), as our best scientific theories imply, so the “simple conclusion” is that electrons are infinitely small, or infinitesimal, and zero-dimensional. Is this “simple conclusion” too simple? Some physicists speculate that there are no physical particles this small and that, in each subsequent century, physicists will discover that all the particles of the previous century have a finite size due to some inner structure. However, most physicists withhold judgment on this point about the future of physics.

A second reason to question whether the “simple conclusion” is too simple is that electrons, quarks, and all other elementary particles behave in a quantum mechanical way. They have a wave nature as well as a particle nature, and they have these simultaneously. When probing an electron’s particle nature it is found to have no limit to how small it can be, but when probing the electron’s wave nature, the electron is found to be spread out through all of space, although it is more probably in some places than others. Also, quantum theory is about groups of objects, not a single object. The theory does not imply a definite result for a single observation but only for averages over many observations, so this is why quantum theory introduces inescapable randomness or unpredictability into claims about single objects and single experimental results. The more accurate theory of quantum electrodynamics (QED) that incorporates special relativity and improves on classical quantum theory for the smallest regions, also implies electrons are infinitesimal particles when viewed as particles, while they are wavelike or spread out when viewed as waves. When considering the electron’s particle nature, QED’s prediction of zero volume has been experimentally verified down to the limits of measurement technology. The measurement process is limited by the fact that light or other electromagnetic radiation must be used to locate the electron, and this light cannot be used to determine the position of the electron more accurately than the distance between the wave crests of the light wave used to bombard the electron. So, all this is why the “simple conclusion” mentioned at the beginning of this paragraph may be too simple. For more discussion, see the chapter “The Uncertainty Principle” in (Hawking 2001) or (Greene 1999, pp. 121-2).

If a scientific theory implies space is a continuum, with the structure of a mathematical continuum, then if that theory is taken at face value, space is infinitely divisible and composed of infinitely small entities, the so-called points of space. But should it be taken at face value? The mathematician David Hilbert declared in 1925, “A homogeneous continuum which admits of the sort of divisibility needed to realize the infinitely small is nowhere to be found in reality. The infinite divisibility of a continuum is an operation which exists only in thought.” Hilbert said actual, transcendental infinities are real in mathematics, but not in physics. Many physicists agree with Hilbert. Many other physicists and philosophers argue that, although Hilbert is correct that ordinary entities such as strawberries and cream are not continuous, he is ultimately incorrect, for the following reasons.

First, the Standard Model of particles and forces is one of the best tested and most successful theories in all the history of physics. So are the theories of relativity and quantum mechanics. All these theories imply or assume that, using Cantor’s technical sense of actual infinity, there are infinitely many infinitesimal instants in any non-zero duration, and there are infinitely many point places along any spatial path. So, time is a continuum, and space is a continuum.

The second challenge to Hilbert’s position is that quantum theory, in agreement with relativity theory, implies that for any possible kinetic energy of a free electron there is half that energy, insofar as an electron can be said to have a value of energy independent of being measured to have it. Although the energy of an electron bound within an atom is quantized, the energy of an unbound or free electron is not. If it accelerates in its reference frame from zero to nearly the speed of light, its energy changes and takes on all intermediate real-numbered values from its rest energy to its total energy. But mass is just a form of energy, as Einstein showed in his famous equation E = mc², so in this sense mass is a continuum as well as energy.

How about non-classical quantum mechanics, the proposed theories of quantum gravity that are designed to remove the disagreements between quantum mechanics and relativity theory? Do these non-classical theories quantize all these continua we’ve been talking about? One such theory, the theory of loop quantum gravity, implies space consists of discrete units called loops. But string theory, which is the more popular of the theories of quantum gravity in the early 21st century, does not imply space is discontinuous. [See (Greene 2004) for more details.] Speaking about this question of continuity, the theoretical physicist Brian Greene says that, although string theory is developed against a background of continuous spacetime, his own insight is that

[T]he increasingly intense quantum jitters that arise on decreasing scales suggest that the notion of being able to divide distances or durations into ever smaller units likely comes to an end at around the Planck length (10⁻³³ centimeters) and Planck time (10⁻⁴³ seconds). …There is something lurking in the microdepths−something that might be called the bare-bones substrate of spacetime−the entity to which the familiar notion of spacetime alludes. We expect that this ur-ingredient, this most elemental spacetime stuff, does not allow dissection into ever smaller pieces because of the violent fluctuations that would ultimately be encountered…. [If] familiar spacetime is but a large-scale manifestation of some more fundamental entity, what is that entity and what are its essential properties? As of today, no one knows. (Greene 2004, pp. 473, 474, 477)

Disagreeing, the theoretical physicist Roger Penrose speaks about both loop quantum gravity and string theory and says:

…in the early days of quantum mechanics, there was a great hope, not realized by future developments, that quantum theory was leading physics to a picture of the world in which there is actually discreteness at the tiniest levels. In the successful theories of our present day, as things have turned out, we take spacetime as a continuum even when quantum concepts are involved, and ideas that involve small-scale spacetime discreteness must be regarded as ‘unconventional.’ The continuum still features in an essential way even in those theories which attempt to apply the ideas of quantum mechanics to the very structure of space and time…. Thus it appears, for the time being at least, that we need to take the use of the infinite seriously, particularly in its role in the mathematical description of the physical continuum. (Penrose 2005, 363)

b. Singularities

There is a good reason why scientists fear the infinite more than mathematicians do. Scientists have to worry that someday we will have a dangerous encounter with a singularity, with something that is, say, infinitely hot or infinitely dense. For example, we might encounter a singularity by being sucked into a black hole. According to Schwarzschild’s solution to the equations of general relativity, a simple, non-rotating black hole is infinitely dense at its center. For a second example of where there may be singularities, there is good reason to believe that 13.8 billion years ago the entire universe was a singularity with infinite temperature, infinite density, infinitesimal volume, and infinite curvature of spacetime.

Some philosophers will ask: Is it not proper to appeal to our best physical theories in order to learn what is physically possible? Usually, but not in this case, say many scientists, including Albert Einstein. He believed that, if a theory implies that some physical properties might have or, worse yet, do have actually infinite values (the so-called singularities), then this is a sure sign of error in the theory. It’s an error primarily because the theory will be unable to predict the behavior of the infinite entity, and so the theory will fail. For example, even if there were a large, shrinking universe pre-existing the Big Bang, if the Big Bang were considered to be an actual singularity, then knowledge of the state of the universe before the Big Bang could not be used to predict events after the Big Bang, or vice versa. This failure to imply the character of later states of the universe is what Einstein’s collaborator Peter Bergmann meant when he said, “A theory that involves singularities…carries within itself the seeds of its own destruction.” The majority of physicists probably would agree with Einstein and Bergmann about this, but the critics of these scientists say this belief that we need to remove singularities everywhere is merely a hope that has been turned into a metaphysical assumption.

But doesn’t quantum theory also rule out singularities? Yes. Quantum theory allows only arbitrarily large, finite values of properties such as temperature and mass-energy density. So which theory, relativity theory or quantum theory, should we trust to tell us whether the center of a black hole is or isn’t a singularity? The best answer is, “Neither, because we should get our answer from a theory of quantum gravity.” A principal attraction of string theory, a leading proposal for a theory of quantum gravity to replace both relativity theory and quantum theory, is that it eliminates the many singularities that appear in previously accepted physical theories such as relativity theory. In string theory, the electrons and quarks are not point particles but are small, finite loops of fundamental string. That finiteness in the loop is what eliminates the singularities.

Unfortunately, string theory has its own problems with infinity. It implies an infinity of kinds of particles. If a particle is a string, then the energy of the particle should be the energy of its vibrating string. Strings have an infinite number of possible vibrational patterns, each corresponding to a particle that should exist if we take the theory literally. One response that string theorists make to this problem about too many particles is that perhaps the infinity of particles did exist at the time of the Big Bang but now they have all disintegrated into a shower of simpler particles and so do not exist today. Another response favored by string theorists is that perhaps there never were an infinity of particles nor a Big Bang singularity in the first place. Instead, the Big Bang was a Big Bounce or quick expansion from a pre-existing, shrinking universe whose size stopped shrinking when it got below the critical Planck length of about 10⁻³⁵ meters.

c. Idealization and Approximation

Scientific theories use idealization and approximation; they are “lies that help us to see the truth,” to use a phrase from the painter Pablo Picasso (who was speaking about art, not science). In our scientific theories, there are ideal gases, perfectly elliptical orbits, and economic consumers motivated only by profit. Everybody knows these are not intended to be real objects. Yet, it is clear that idealizations and approximations are actually needed in science in order to promote genuine explanation of many phenomena. We need to reduce the noise of the details in order to see what is important. In short, approximations and idealizations can be explanatory. But what about approximations and idealizations that involve the infinite?

Although the terms “idealization” and “approximation” are often used interchangeably, John Norton (Norton 2012) recommends paying more attention to their difference by saying that, when there is some aspect of the world, some target system, that we are trying to understand scientifically, approximations should be considered to be inexact descriptions of the target system whereas idealizations should be considered to be new systems or parts of new systems that also are approximations to the target system but that contain reference to some novel object or property. For example, elliptical orbits are approximations to actual orbits of planets, but ideal gases are idealizations because they contain novel objects such as point-sized gas particles that are part of a new system that is useful for approximating the target system of actual gases.

Philosophers of science disagree about whether all appeals to infinity can be known a priori to be mere idealizations or approximations. Our theory of the solar system justifies our belief that the Earth is orbited by a moon, not just an approximate moon. The speed of light in a vacuum really is constant, not just approximately constant. Why then should it be assumed, as it often is, that all appeals to infinity in scientific theory are approximations or idealizations? Must the infinity be an artifact of the model rather than a feature of actual physical reality? Philosophers of science disagree on this issue. See (Mundy, 1990, p. 290).

There is an argument for believing some appeals to infinity definitely are neither approximations nor idealizations. The argument presupposes a realist rather than an antirealist understanding of science, and it begins with a description of the opponents’ position. Carl Friedrich Gauss (1777-1855) was one of the greatest mathematicians of all time. He said scientific theories involve infinities merely as approximations or idealizations and merely in order to make for easy applications of those theories, when in fact all real entities are finite. At the time, nearly everyone would have agreed with Gauss. Roger Penrose argues against Gauss’ position:

Nevertheless, as tried and tested physical theory stands today—as it has for the past 24 centuries—real numbers still form a fundamental ingredient of our understanding of the physical world. (Penrose 2004, 62)

Gauss’s position could be buttressed if there were useful alternatives to our physical theories that do not use infinities. There actually are alternative mathematical theories of analysis that do not use real numbers and do not use infinite sets and do not require the line to be dense. See (Ahmavaara 1965) for an example. Representing the majority position among scientists on this issue, Penrose says, “To my mind, a physical theory which depends fundamentally upon some absurdly enormous…number would be a far more complicated (and improbable) theory than one that is able to depend upon a simple notion of infinity” (Penrose 2005, 359). David Deutsch agrees. He says, “Versions of number theory that confined themselves to ‘small natural numbers’ would have to be so full of arbitrary qualifiers, workarounds and unanswered questions, that they would be very bad explanations until they were generalized to the case that makes sense without such ad-hoc restrictions: the infinite case.” (Deutsch 2011, pp. 118-9) And surely a successful explanation is the surest route to understanding reality.

In opposition to this position of Penrose and Deutsch, and in support of Gauss’ position, the physicist Erwin Schrödinger remarks, “The idea of a continuous range, so familiar to mathematicians in our days, is something quite exorbitant, an enormous extrapolation of what is accessible to us.” Emphasizing this point about being “accessible to us,” some metaphysicians attack the applicability of the mathematical continuum to physical reality on the grounds that a continuous human perception over time is not mathematically continuous. Wesley Salmon responds to this complaint from Schrödinger:

…The perceptual continuum and perceived becoming [that is, the evidence from our sense organs that the world changes from time to time] exhibit a structure radically different from that of the mathematical continuum. Experience does seem, as James and Whitehead emphasize, to have an atomistic character. If physical change could be understood only in terms of the structure of the perceptual continuum, then the mathematical continuum would be incapable of providing an adequate description of physical processes. In particular, if we set the epistemological requirement that physical continuity must be constructed from physical points which are explicitly definable in terms of observables, then it will be impossible to endow the physical continuum with the properties of the mathematical continuum. In our discussion…, we shall see, however, that no such rigid requirement needs to be imposed. (Salmon 1970, 20)

Salmon continues by making the point that calculus provides better explanations of physical change than explanations which accept the “rigid requirement” of understanding physical change in terms of the structure of the perceptual continuum, so he recommends that we apply Ockham’s Razor and eliminate that rigid requirement. But the issue is not settled.

d. Infinity in Cosmology

Let’s review some of the history regarding the volume of spacetime. Aristotle said the past is infinite because for any past time we can imagine an earlier one. It is difficult to make sense of his belief about the past since he means it is potentially infinite. After all, the past has an end, namely the present, so its infinity has been completed and therefore is not a potential infinity. This problem with Aristotle’s reasoning was first raised in the 13th century by Richard Rufus of Cornwall. It was not given the attention it deserved because of the assumption for so many centuries that Aristotle couldn’t have been wrong about time, especially since his position was consistent with Christian, Jewish, and Muslim theology which implies the physical world became coherent or well-formed only a finite time ago (even if past time itself is potentially infinite). However, Aquinas argued against Aristotle’s view that the past is infinite; Aquinas’ grounds were that Holy Scripture implies God created the world (and thus time itself) a finite time ago and that Aristotle was wrong to put so much trust in what we can imagine.

Unlike time, Aristotle claimed space is finite. He said the volume of physical space is finite because it is enclosed within a finite, spherical shell of visible, fixed stars with the Earth at its center. On this topic of space not being infinite, Aristotle’s influence was authoritative to most scholars for the next eighteen hundred years.

The debate about whether the volume of space is infinite was rekindled in Renaissance Europe. The English astronomer and defender of Copernicus, Thomas Digges (1546–1595), was the first scientist to reject the ancient idea of an outer spherical shell and to declare that physical space is actually infinite in volume and filled with stars. The physicist Isaac Newton (1642–1727) at first believed the universe’s material is confined to only a finite region while it is surrounded by infinite empty space, but in 1691 he realized that if there were a finite number of stars in a finite region, then gravity would require all the stars to fall in together at some central point. To avoid this result, he later speculated that the universe contains an infinite number of stars in an infinite volume. We now know that Newton’s speculation about the stability of an infinity of stars in an infinite universe is incorrect. There would still be clumping so long as the universe did not expand. (Hawking 2001, p. 9)

Immanuel Kant (1724–1804) declared that space and time are both potentially infinite in extent because this is imposed by our own minds. Space and time are not features of “things in themselves” but are an aspect of the very form of any possible human experience, he said. We can know a priori even more about space than about time, he believed; and he declared that the geometry of space must be Euclidean. Kant’s approach to space and time as something knowable a priori went out of fashion in the early 20th century. It was undermined in large part by the discovery of non-Euclidean geometries in the 19th century, then by Beltrami’s and Klein’s proofs that these geometries are as logically consistent as Euclidean geometry, and finally by Einstein’s successful application to physical space of non-Euclidean geometry within his general theory of relativity.

The volume of spacetime is finite at present if we can trust the classical Big Bang theory. [But do not think of this finite space as having a boundary beyond which a traveler falls over the edge into nothingness.] Assuming space is all the places that have been created since the Big Bang, then the volume of space is definitely finite at present, though it is huge and growing ever larger over time. Assuming this expansion will never stop, it follows that the volume of spacetime is potentially infinite but not actually infinite. For more discussion of the issue of the volume of spacetime, see (Greene 2011).

Einstein’s theory of relativity implies that all physical objects must travel at less than light speed (in a vacuum). Nevertheless, by exploiting the principles of time dilation and length contraction in his special theory of relativity, the time limits on human exploration of the universe can be removed. Assuming you can travel safely at any high speed under light speed, then as your spaceship approaches light speed, your trip’s distance and travel time become infinitesimally short. In principle, you have time on your own clock to cross the Milky Way galaxy, a trip that takes light itself 100,000 years as measured on an Earth clock.
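The time-dilation claim above can be made concrete with a small calculation. The following is a minimal sketch, assuming a trip at constant speed with no acceleration phases; the function names and the parameter beta (speed as a fraction of light speed) are illustrative choices, not taken from the text. Only the 100,000 light-year distance comes from the paragraph above.

```python
import math

def lorentz_factor(beta):
    """Return gamma = 1 / sqrt(1 - beta^2), where beta = v/c and 0 <= beta < 1."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta * beta)

def trip_times_years(distance_ly, beta):
    """Return (earth_years, traveler_years) for a constant-speed trip.

    distance_ly is the distance in light-years as measured in the Earth frame.
    Assumption: acceleration and deceleration are ignored.
    """
    earth_years = distance_ly / beta                      # time on an Earth clock
    traveler_years = earth_years / lorentz_factor(beta)   # time on the ship's clock
    return earth_years, traveler_years

# The traveler's elapsed time shrinks as beta approaches 1.
for beta in (0.9, 0.999, 0.999999):
    earth, traveler = trip_times_years(100_000.0, beta)
    print(f"beta={beta}: Earth clock {earth:.1f} y, ship clock {traveler:.1f} y")
```

On these assumptions, a ship at 99.9999% of light speed would cross 100,000 light-years in about 141 years of its own time, while slightly more than 100,000 years pass on Earth.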

5. Infinity in Mathematics

The previous sections of this article have introduced the concepts of actual infinity and potential infinity and explored the development of calculus and set theory, but this section probes deeper into the role of infinity in mathematics. Mathematicians always have been aware of the special difficulty in dealing with the concept of infinity in a coherent manner. Intuitively, it seems reasonable that if we have two infinities of things, then we still have an infinity of them. So, we might represent this intuition mathematically by the equation 2 ∞ = 1 ∞. Dividing both sides by ∞ will prove that 2 = 1, which is a good sign we were not using infinity in a coherent manner. In recommending how to use the concept of infinity coherently, Bertrand Russell said pejoratively:

The whole difficulty of the subject lies in the necessity of thinking in an unfamiliar way, and in realising that many properties which we have thought inherent in number are in fact peculiar to finite numbers. If this is remembered, the positive theory of infinity…will not be found so difficult as it is to those who cling obstinately to the prejudices instilled by the arithmetic which is learnt in childhood. (Salmon 1970, 58)
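The failure of ordinary cancellation that leads to the bogus proof of 2 = 1 has a loose analogue in floating-point arithmetic. The sketch below is only an analogy: the IEEE 754 infinity used by Python's math.inf is not one of Cantor's transfinite numbers. It does show the same moral, namely that doubling infinity changes nothing and that dividing infinity by itself yields no definite value.

```python
import math

inf = math.inf

# "Two infinities of things are still an infinity of them."
two_inf = 2 * inf
print(two_inf == inf)          # True

# Dividing both sides by infinity is not a legitimate step:
# the quotient is "not a number" rather than 1.
quotient = inf / inf
print(math.isnan(quotient))    # True
```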

That positive theory of infinity that Russell is talking about is set theory, and the new arithmetic is the result of Cantor’s generalizing the notions of order and of size of sets into the infinite, that is, to the infinite ordinals and infinite cardinals. These numbers are also called transfinite ordinals and transfinite cardinals. The following sections will briefly explore set theory and the role of infinity within mathematics. The main idea, though, is that the basic theories of mathematical physics are properly expressed using the differential calculus with real-number variables, and these concepts are well-defined in terms of set theory which, in turn, requires using actual infinities or transfinite infinities of various kinds.

a. Infinite Sums

In the 17th century, when Newton and Leibniz invented calculus, they wondered what the value is of this infinite sum:

1/1 + 1/2 + 1/4 + 1/8 + ….

They believed the sum is 2. Knowing about the dangers of talking about infinity, most later mathematicians hoped to find a technique to avoid using the phrase “infinite sum.” Cauchy and Weierstrass eventually provided this technique two centuries later. They removed any mention of “infinite sum” by using the formal idea of a limit. Informally, the Cauchy-Weierstrass idea is that instead of overtly saying the infinite sum x1 + x2 + x3 + … is some number S, as Newton and Leibniz were saying, one should say that the series converges to S just in case the numerical difference between S and any partial sum is as small as one desires, provided that partial sum occurs sufficiently far out in the sequence of partial sums. More formally it is expressed this way:

If an infinite series of real numbers is x1 + x2 + x3 + …, and if the infinite sequence of its partial sums is s1, s2, s3, …, then the series converges to S if and only if for every positive number ε there exists an integer n such that, for all integers k > n, |sk – S| < ε.

This technique of talking about limits was due to Cauchy in 1821 and Weierstrass in the period from 1850 to 1871. The two drawbacks to this technique are that (1) it is unintuitive and more complicated than Newton and Leibniz’s intuitive approach that did mention infinite sums, and (2) it is not needed because infinite sums were eventually legitimized by being given a set-theoretic foundation.
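The convergence definition can be checked directly for the series 1/1 + 1/2 + 1/4 + 1/8 + …. The sketch below uses exact rational arithmetic so that no rounding error intrudes; the function names are illustrative. For a given ε it finds the first partial sum that lies within ε of 2. Because the partial sums of this particular series move steadily closer to 2, every later partial sum is also within ε, so the n of the definition can be taken to be one less than the index returned.

```python
from fractions import Fraction

def geometric_terms():
    """Yield the terms 1/1, 1/2, 1/4, 1/8, ... without end."""
    x = Fraction(1)
    while True:
        yield x
        x /= 2

def partial_sums(terms):
    """Yield s1, s2, s3, ... where sk is the sum of the first k terms."""
    total = Fraction(0)
    for x in terms:
        total += x
        yield total

def first_index_within(epsilon, limit=Fraction(2)):
    """Return the least k (counting from 1) such that |sk - limit| < epsilon."""
    for k, s in enumerate(partial_sums(geometric_terms()), start=1):
        if abs(s - limit) < epsilon:
            return k

print(first_index_within(Fraction(1, 2)))     # 3
print(first_index_within(Fraction(1, 1000)))  # 11
```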

b. Infinitesimals and Hyperreals

There has been considerable controversy throughout history about how to understand infinitesimal objects and infinitesimal changes in the properties of objects. Intuitively, an infinitesimal object is as small as you please but not quite nothing. Infinitesimal objects and infinitesimal methods were first used by Archimedes in ancient Greece, but he did not mention them in any publication intended for the public because he did not consider his use of them to be rigorous. Infinitesimals became better known when Leibniz used them in his differential and integral calculus. The differential calculus can be considered to be a technique for treating continuous motion as being composed of an infinite number of infinitesimal steps. The calculus’ use of infinitesimals led to the so-called “golden age of nothing” in which infinitesimals were used freely in mathematics and science. During this period, Leibniz, Euler, and the Bernoullis applied the concept. Euler applied it cavalierly (although his intuition was so good that he rarely if ever made mistakes), but Leibniz and the Bernoullis were concerned with the general question of when we could, and when we could not, consider an infinitesimal to be zero. They were aware of apparent problems with these practices in large part because they had been exposed by Berkeley.

In 1734, George Berkeley attacked the concept of infinitesimal as ill-defined and incoherent because there were no definite rules for when the infinitesimal should be and shouldn’t be considered to be zero. Berkeley, like Leibniz, was thinking of infinitesimals as objects with a constant value–as genuinely infinitesimally small magnitudes–whereas Newton thought of them as variables that could arbitrarily approach zero. Either way, there were coherence problems. The scientists and results-oriented mathematicians of the golden age of nothing had no good answer to the coherence problem. As standards of rigorous reasoning increased over the centuries, mathematicians became more worried about infinitesimals. They were delighted when Cauchy in 1821 and Weierstrass in the period from 1850 to 1875 developed a way to use calculus without infinitesimals. From then on, any appeal to infinitesimals was considered illegitimate, and mathematicians soon stopped using them.

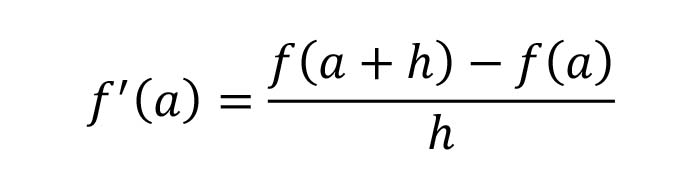

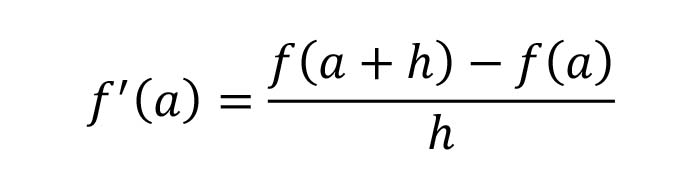

Here is how Cauchy and Weierstrass eliminated infinitesimals with their concept of limit. Suppose we have a function f, and we are interested in the Cartesian graph of the curve y = f(x) at some point a along the x-axis. What is the rate of change of f at a? This is the slope of the tangent line at a, and it is called the derivative f’ at a. This derivative was defined by Leibniz to be

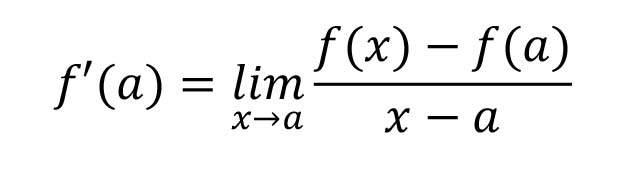

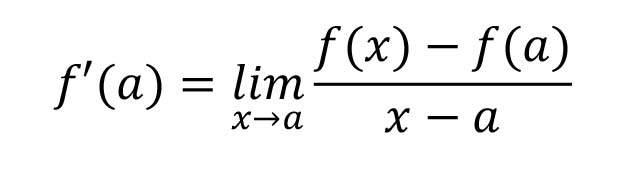

where h is an infinitesimal. Because of suspicions about infinitesimals, Cauchy and Weierstrass suggested replacing Leibniz’s definition of the derivative with

That is, f'(a) is the limit, as x approaches a, of the above ratio. The limit idea was rigorously defined using Cauchy’s well-known epsilon and delta method. Soon after the Cauchy-Weierstrass definition of derivative was formulated, mathematicians stopped using infinitesimals.
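The limit definition can be illustrated by evaluating the ratio (f(x) − f(a)) / (x − a) for values of x ever closer to a. The sketch below assumes the sample function f(x) = x² and the point a = 3, neither of which comes from the text, and it uses exact fractions. For this function the ratio simplifies to x + a, so its distance from the derivative 6 equals the distance of x from a, and choosing δ = ε satisfies the epsilon-delta condition.

```python
from fractions import Fraction

def f(x):
    """Sample function chosen for illustration: f(x) = x squared."""
    return x * x

def difference_quotient(func, a, x):
    """Return (func(x) - func(a)) / (x - a); x must differ from a."""
    if x == a:
        raise ValueError("x must differ from a")
    return (func(x) - func(a)) / (x - a)

a = Fraction(3)
for k in range(1, 6):
    x = a + Fraction(1, 10**k)   # x approaches a from above
    ratio = difference_quotient(f, a, x)
    # The gap between the ratio and 6 shrinks by a factor of 10 each step.
    print(k, ratio, abs(ratio - 6))
```

No infinitesimal appears anywhere in the computation: every x is an ordinary rational number at a finite, nonzero distance from a.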

The scientists did not follow the lead of the mathematicians. Despite the lack of a coherent theory of infinitesimals, scientists continued to reason with infinitesimals because infinitesimal methods were so much more intuitively appealing than the mathematicians’ epsilon-delta methods. Although students in calculus classes in the early 21st century are still taught the unintuitive epsilon-delta methods, Abraham Robinson (Robinson 1966) created a rigorous alternative to standard Weierstrassian analysis by using the methods of model theory to define infinitesimals.

Here is Robinson’s idea. Think of the rational numbers in their natural order as being gappy with real numbers filling the gaps between them. Then think of the real numbers as being gappy with hyperreals filling the gaps between them. There is a cloud or region of hyperreals surrounding each real number (that is, surrounding each real number described nonstandardly). To develop these ideas more rigorously, Robinson used this simple definition of an infinitesimal:

h is infinitesimal if and only if 0 < |h| < 1/n, for every positive integer n.

|h| is the absolute value of h.

Robinson did not actually define an infinitesimal as a number on the real line. The infinitesimals were defined on a new number line, the hyperreal line, that contains within it the structure of the standard real numbers from classical analysis. In this sense, the hyperreal line is the extension of the reals to the hyperreals. The development of analysis via infinitesimals creates a nonstandard analysis with a hyperreal line and a set of hyperreal numbers that include real numbers. In this nonstandard analysis, 78+2h is a hyperreal that is infinitesimally close to the real number 78. Sums and products of infinitesimals are infinitesimal.

Because of the rigor of the extension, all the arguments for and against Cantor’s infinities apply equally to the infinitesimals. Sentences about the standardly-described reals are true if and only if they are true in this extension to the hyperreals. Nonstandard analysis allows proofs of all the classical theorems of standard analysis, and it very often provides shorter, more direct, and more elegant proofs than the original ones that used standard analysis with epsilons and deltas. Objections by practicing mathematicians to infinitesimals subsided after this was appreciated. With a good definition of “infinitesimal” they could then use it to explain related concepts such as in the sentence, “That curve approaches infinitesimally close to that line.” See (Wolf 2005, chapter 7) for more about infinitesimals and hyperreals.
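To see how an infinitesimal in Robinson’s sense can do the work of a limit, consider a worked example that is not drawn from the text: the derivative of f(x) = x² at a real point a. The derivation relies on the “standard part” operation st, which assigns to each finite hyperreal the unique real number infinitesimally close to it. This operation is a standard tool of nonstandard analysis, but it is an added assumption here because the passage above does not name it.

```latex
\begin{align*}
\frac{f(a+h) - f(a)}{h}
  &= \frac{(a+h)^2 - a^2}{h}
   = \frac{2ah + h^2}{h}
   = 2a + h
   && \text{(division is allowed because } h \neq 0\text{)} \\
f'(a) &= \operatorname{st}(2a + h) = 2a
   && \text{(since } 2a + h \text{ is infinitesimally close to } 2a\text{)}
\end{align*}
```

At no step is h treated as zero. It is discarded only by passing to the nearest real number, which is one way of answering Berkeley’s complaint that there were no definite rules for when an infinitesimal may be ignored.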

c. Mathematical Existence

Mathematics is apparently about mathematical objects, so it is apparently about infinitely large objects, infinitely small objects, and infinitely many objects. Mathematicians who are doing mathematics and are not being careful about ontology too easily remark that there are infinite-dimensional spaces, the continuum, continuous functions, an infinity of functions, and this or that infinite structure. Do these infinities really exist? The philosophical literature is filled with arguments pro and con and with fine points about senses of existence.

When axiomatizing geometry, Euclid said that between any two points one could choose to construct a line. Opposed to Euclid’s constructivist stance, many modern axiomatizers take a realist philosophical stance by declaring simply that there exists a line between any two points, so the line pre-exists any construction process. In mathematics, the constructivist will recognize the existence of a mathematical object only if there is at present an algorithm (that is, a step by step “mechanical” procedure operating on symbols that is finitely describable, that requires no ingenuity and that uses only finitely many steps) for constructing or finding such an object. Assertions require proofs. The constructivist believes that to justifiably assert the negation of a sentence S is to prove that the assumption of S leads to a contradiction. So, legitimate mathematical objects must be shown to be constructible in principle by some mental activity and cannot be assumed to pre-exist any such construction process nor to exist simply because their non-existence would be contradictory. A constructivist, unlike a realist, is a kind of conceptualist, one who believes that an unknowable mathematical object is impossible. Most constructivists complain that, although potential infinities can be constructed, actual infinities cannot be.

There are many different schools of constructivism. The first systematic one, and perhaps the most well-known version and most radical version, is due to L.E.J. Brouwer. He is not a finitist, but his intuitionist school demands that all legitimate mathematics be constructible from a basis of mental processes he called “intuitions.” These intuitions might be more accurately called “clear mental procedures.” If there were no minds capable of having these intuitions, then there would be no mathematical objects just as there would be no songs without ideas in the minds of composers. Numbers are human creations. The number pi is intuitionistically legitimate because we have an algorithm for computing all its decimal digits, but the following number g is not legitimate. It is the number whose nth digit is either 0 or 1, and it is 1 if and only if there are n consecutive 7s in the decimal expansion of pi. No person yet knows how to construct the decimal digits of g. Brouwer argued that the actually infinite set of natural numbers cannot be constructed (using intuitions) and so does not exist. The best we can do is to have a rule for adding more members to a set. So, his concept of an acceptable infinity is closer to that of potential infinity than actual infinity. Hermann Weyl emphasizes the merely potential character of these infinities:

Brouwer made it clear, as I think beyond any doubt, that there is no evidence supporting the belief in the existential character of the totality of all natural numbers…. The sequence of numbers which grows beyond any stage already reached by passing to the next number, is a manifold of possibilities open towards infinity; it remains forever in the status of creation, but is not a closed realm of things existing in themselves. (Weyl is quoted in (Kleene 1967, p. 195))
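The contrast between pi and the number g described above can be made vivid with a bounded search. The sketch below generates the digits of pi with a widely circulated spigot algorithm in the style of Gibbons, and then looks for n consecutive 7s among the first max_digits digits. The function names and the max_digits cutoff are illustrative assumptions. A successful search settles that the nth digit of g is 1, but a failed search settles nothing, because the run might occur further out. No finite amount of searching can by itself establish that a digit of g is 0.

```python
from itertools import islice

def pi_digits():
    """Yield the decimal digits of pi (3, 1, 4, 1, 5, ...) one at a time.

    Uses an unbounded spigot algorithm with exact integer arithmetic.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            yield n
            nr = 10 * (r - n * t)
            n = (10 * (3 * q + r)) // t - 10 * n
            q *= 10
            r = nr
        else:
            nr = (2 * q + r) * l
            nn = (q * (7 * k) + 2 + r * l) // (t * l)
            q *= k
            t *= l
            l += 2
            k += 1
            n = nn
            r = nr

def g_digit(n, max_digits):
    """Return 1 if n consecutive 7s occur in the first max_digits digits of pi.

    Return None when the bounded search fails: the digit is then undecided,
    not known to be 0.
    """
    run = 0
    for d in islice(pi_digits(), max_digits):
        run = run + 1 if d == 7 else 0
        if run >= n:
            return 1
    return None

print(g_digit(1, 50))     # 1, because a 7 occurs early in the expansion
print(g_digit(10, 100))   # None: undecided by this search
```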